Webapp Blue-Green Deployment Without Breaking Sessions/With Fallback With HAProxy

Use case: Deploy a new version of a webapp so that all new users are sent to the new version while users with open sessions continue using the previous version (so that they don't lose their precious session state). Users of the new version can explicitly ask for the previous version if it doesn't work as expected, and vice versa.

Benefits: Get new features to users that need them as soon as possible without affecting anybody negatively and without risking that a defect will prevent users from achieving their goal (thanks to being able to fall back to the previous version).

An alternative to sticky sessions would be to use an external, shared session store (for example Redis). But the overhead and complexity of that wasn't worth the gains in this case.

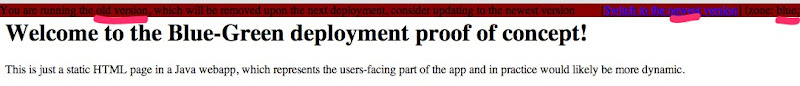

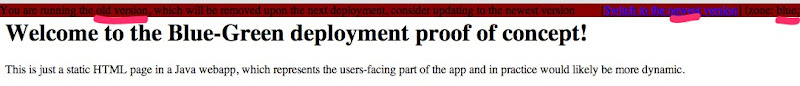

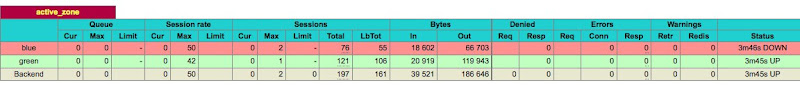

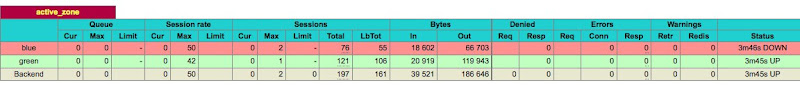

Implementation

Configuration

We will run two instances of our service, on two different ports, with HAProxy in front of them. We will use HAProxy's health checks to trick it into believing that one of the instances is partially unwell. HAProxy will thus send it only the existing sessions, while sending all new ones to the other, "fully healthy" instance.

Notice that even though I run both HAProxy and the instances on the same machine, they could also live on different ones.
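To make the routing idea concrete, here is a toy model of the decision in Java. It is not HAProxy code and not part of the PoC; the class `ZoneRouter` and its methods are made up for illustration, and the sticky zone is assumed to come from a session cookie. It also ignores the case where an instance has really crashed.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Toy model of the routing idea; hypothetical, not how HAProxy is implemented. */
class ZoneRouter {
    // zone name -> result of the last health check
    private final Map<String, Boolean> healthy = new LinkedHashMap<>();

    void setHealthy(String zone, boolean isHealthy) {
        healthy.put(zone, isHealthy);
    }

    /**
     * @param stickyZone zone named in the client's session cookie, or null for a new client
     * @return the zone that should serve the request, empty if no zone passes its health check
     */
    Optional<String> route(String stickyZone) {
        // Existing sessions stay with their zone even when its health check fails.
        if (stickyZone != null && healthy.containsKey(stickyZone)) {
            return Optional.of(stickyZone);
        }
        // New clients only go to a zone whose health check passes.
        return healthy.entrySet().stream()
                .filter(Map.Entry::getValue)
                .map(Map.Entry::getKey)
                .findFirst();
    }
}
```

With blue marked unhealthy and green healthy, `route(null)` picks green for a new client, while `route("blue")` keeps an existing blue session on blue.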

There are actually two solutions. The first one, described here, is simpler, but clients will experience a downtime of about 5 seconds when an instance really is unavailable (e.g. because it crashed). The other solution doesn't suffer from this drawback but requires the ability to reach the instance through two different ports (or perhaps hostnames) for the health checks. Both solutions are well described in HAProxy's "architecture" document: "4.1 Soft-stop using a file on the servers" (though we will use a dynamic servlet instead of a static file) and "4.2 Soft-stop using backup servers".

Now go to GitHub to see the proof of concept implementation and read the two sections, 4.1 and 4.2, referenced above. The implementation uses a simple Java webapp running on Tomcat and can be run either on a Linux machine or via Vagrant. Follow the instructions and information in the readme.

The webapp needs to implement support for settable health checks. In our case, the servlet accepts POSTs to /health/(enable|disable) to set the availability status and HEAD requests to /health that return either OK or SERVICE UNAVAILABLE based on the status.
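A minimal sketch of such a settable health check might look like the following. To keep it self-contained it uses the JDK's built-in `com.sun.net.httpserver.HttpServer` instead of a servlet on Tomcat, so it is not the PoC's code. The class name `HealthEndpoint`, the `initiallyAvailable` flag and the 404 for anything else are my assumptions.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical settable health check; a stand-in for the PoC's servlet. */
class HealthEndpoint {
    private final AtomicBoolean available;

    HealthEndpoint(boolean initiallyAvailable) {
        this.available = new AtomicBoolean(initiallyAvailable);
    }

    /** Applies the request to the availability flag and returns the HTTP status to send. */
    int statusFor(String method, String path) {
        if (method.equals("POST") && path.equals("/health/enable")) {
            available.set(true);
            return 200;
        }
        if (method.equals("POST") && path.equals("/health/disable")) {
            available.set(false);
            return 200;
        }
        if (method.equals("HEAD") && path.equals("/health")) {
            return available.get() ? 200 : 503; // OK or SERVICE UNAVAILABLE
        }
        return 404;
    }

    /** Starts an HTTP server on the given port (0 = any free port) serving /health. */
    HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("localhost", port), 0);
        // Contexts match by path prefix, so this also receives /health/enable and /health/disable.
        server.createContext("/health", this::handle);
        server.start();
        return server;
    }

    private void handle(HttpExchange exchange) throws IOException {
        int status = statusFor(exchange.getRequestMethod(), exchange.getRequestURI().getPath());
        exchange.sendResponseHeaders(status, -1); // -1: no response body
        exchange.close();
    }
}
```

Calling `new HealthEndpoint(false).start(8080)` would give an instance that reports itself as unavailable until somebody POSTs to /health/enable.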

Deployment

The deployment is simple:

- Figure out which zone, blue or green, is the current one and which is the previous one, e.g. by having recorded it in a file.

- (Optional) Check that the previous instance has no active sessions and take it down. (Not implemented in the PoC.)

- Deploy over the previous instance (copy the new binary from a remote repository, S3 or whatever)

- Start the newly deployed instance and verify that it is well, record its zone as the active one.

- Switch over: Tell the old instance to start reporting to HAProxy that it is unavailable and the new one to report itself as healthy.

- Drink a cocktail and enjoy.
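The bookkeeping and the switch-over from the steps above could be sketched roughly like this in Java 11+. This is not the PoC's deployment script. The class `ZoneSwitch`, the state file, and the assumption that each instance serves /health at the root of its URI are all mine.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical helper for the zone bookkeeping and the switch-over step. */
class ZoneSwitch {
    /** The zone that is not the given one. */
    static String other(String zone) {
        switch (zone) {
            case "blue": return "green";
            case "green": return "blue";
            default: throw new IllegalArgumentException("unknown zone: " + zone);
        }
    }

    /** Reads the active zone recorded by the previous deployment. */
    static String readActive(Path stateFile) throws IOException {
        return Files.readString(stateFile).trim();
    }

    /** Records the given zone as the active one. */
    static void recordActive(Path stateFile, String zone) throws IOException {
        Files.writeString(stateFile, zone + "\n");
    }

    /** POSTs to /health/enable or /health/disable on one instance. */
    static void setHealth(HttpClient client, URI instance, boolean enabled)
            throws IOException, InterruptedException {
        URI target = instance.resolve("/health/" + (enabled ? "enable" : "disable"));
        HttpRequest request = HttpRequest.newBuilder(target)
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
        HttpResponse<Void> response = client.send(request, HttpResponse.BodyHandlers.discarding());
        if (response.statusCode() != 200) {
            throw new IOException(target + " answered " + response.statusCode());
        }
    }

    /** Switch-over: the new instance starts passing its health check, the old one starts failing it. */
    static void switchOver(HttpClient client, URI oldInstance, URI newInstance)
            throws IOException, InterruptedException {
        setHealth(client, newInstance, true);
        setHealth(client, oldInstance, false);
    }
}
```

The sketch enables the new instance before disabling the old one so that at least one zone reports itself as healthy at any moment; that ordering is a choice of mine, not something the PoC prescribes.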

Let me see!

An old version of the app running in the blue zone (localhost:8080, reloaded in a browser tab where it was opened before the new deployment):

PoC - old version of the app

A new version of the app in the green zone (localhost:8080, in a new browser):

PoC - the new version of the app

A part of HAProxy's stats page reporting about the zones (localhost:8081):

PoC - HAProxy's stats page with the old version (blue) pretending to be down so that new requests go to green